Everything You Need to Know About Directed Acyclic Graphs (DAGS)

Directed acyclic graphs are a general category in graph theory, computer science and mathematics which essentially constitute a topological ordering where vertices (e.g., a nodes, tasks or events) are coupled with edges (directed arrows, dependency relationships or transactions) in an asynchronous fashion (nodes cannot loop back to themselves but the flow goes in one direction, i.e. directed).

DAGs are applied in modeling many kinds of information where collections of events must be represented in how they influence each other (probabilistic structures in Bayesian networks, records of historical data, distributed revision control systems, etc.)

This departures from the paradigm of blockchain technology in that the blockchain fabric operates by chaining flat sequences of lists and grouping them in blocks. With blockchain, every block references the one before and including it, which leads to bottlenecks when too many transactions begin arriving too frequently.

This makes it difficult to achieve consensus on valid blocks (the notorious scalability issue). In a DAG structured environment there is no theoretical limit on transaction throughput as transactions are directly linked rather than grouped and serialized on a single lane.

The DAG technical design also allows for a broader range of algorithms which could be applied and thus broader flexibility. There are a few DAG-based projects out there, all very different from each other and noteworthy, all having built their codebase from scratch (as opposed to another Bitcoin codebase hack).

Here are some common properties they share:

- Acyclicity: Time flows in one direction. Newer transactions reference older ones, but not the other way around. In cycles group of tasks depend on each other (if there were cycles there wouldn't be topological ordering). In a DAG, every node depends on previous ones referencing it. This allows that transactions can be executed locally or even off-line and processed, confirmed or finalized at later points.

- Latency: Speed of execution and confirmation times are not constrained by block-size, but bandwidth between communicating peers. There is no theoretical limit to how much the system can scale.

- Feeless (“pre-mined”): Fixed supply, no mining involved. Every transaction issuer is simultaneously a validator, or otherwise there are representatives or witnesses involved in cases of conflicts or disputes. This enables feeless micro and nano transactions limiting environmental impact.

- Zero-value transactions: E.g. messages, or non-value transactions whether or not requiring digital signatures and fitting in a UDP packet.

- Database pruning: Called pruning in Nano and snapshotting in IOTA. No such mechanism yet with Byteballs. It allows for keeping database slim and different nodes can save only the history they are interested in or is relevant to them.

DAG technology has already been used in a number of cryptocurrencies as developers actively look for alternatives to the current blockchain architecture. Below we will look into the underlying technology of three of the most well known DAG based cryptocurrencies.

Nano

Nano (formerly Raiblocks) began in December 2014 (founder Colin LeMahieu spearheading development of the core protocol) when the white paper and beta implementation were first published, making it one of the first DAG-based cryptocurrencies.

A pure currency focused on delivering reliable, quick peer-to-peer payments and rapid exchange transfers for arbitrage, Nano "does one thing and does it right" (a motto reflective of the KISS principle).

Nano makes use of a peculiar architecture (termed block-lattice) which in a way resembles an inside out Lightning Network. In other words, rather than keeping the jurisdiction of a global blockchain which is then branched in side-chains, Nano is already a network topology where each account instead has its own blockchain (account-chain).

Each of these are equivalent to the account’s transaction/balance history and each account-chain can only be updated by the account’s owner. This also makes it everybody's responsibility which other blockchains it chooses to transact and do business with.

This a key design feature in Nano where run-time agreement is replaced with design-time agreement where everyone agrees via signature checking that only an account owner can modify their own chain.

Nano's minimalist approach seems to align with the norms of the UNIX philosophy as summarized by Doug McIlroy in the Bell System Technical Journal thus:

- Make each program do one thing well. To do a new job, build afresh rather than complicate old programs by adding new "features".

- Expect the output of every program to become the input to another, as yet unknown, program. Don't clutter output with extraneous information. Avoid stringently columnar or binary input formats. Don't insist on interactive input.

- Design and build software, even operating systems, to be tried early, ideally within weeks. Don't hesitate to throw away the clumsy parts and rebuild them.

- Use tools in preference to unskilled help to lighten a programming task, even if you have to detour to build the tools and expect to throw some of them out after you've finished using them.

Overview of The Nano Protocol

Sticking to the UNIX philosophy, the Nano protocol is extremely light-weight, befitting the required minimum UDP transmission packet size. The User Datagram Protocol is used to rapidly communicate very short messages ensuring only the integrity of the data. It is capable of running on low-power or legacy hardware with minimal resources aiming to be ideal for practical everyday use (to buy coffee with rather than store value in).

Consensus in Nano is achieved by users choosing representative accounts to cast votes in the event of a arising dispute. Consensus voting is triggered only in the event of malicious transactions and representative nodes with higher account balances are weighted more favorably.

This gives incentives to Nano holders to participate in maintaining the integrity of the ledger. Similarly, in the event of a fork, the genesis needs to be redone and from there everything is redistributed. This is what makes such occurrences rather unlikely. Representatives are explained in more detail in the documentation and a list of representatives can be found here.

Among the contributing developers (Nano is largely community driven) is also the PayPal software engineer Daniel Brain who built a simple checkout for Nano that makes it quick and easy for merchants to implement. Additionally, bigger merchants can set up their own nodes for handling transaction loads.

Regarding supply, Nano is "pre-mined" in that the initial genesis account contains the total fixed balance (of 133,248,290) which is then distributed between other accounts making up the topology of the DAGchain.

The genesis balance is kept in cold storage in a safety deposit box and blocks move from the genesis to a landing account every once in a week as to minimize the number of live undistributed blocks. The distribution at the beginning took place via a public faucet where one has to solve captchas as Proof-of-Work (PoW) an anti-spam mechanism.

Interestingly, much of the early captcha-solving was performed by Venezuelans (the spike of searches from Venezuela can seen in Google Trends) which led to a lot of the distributed XRB going in the hands of some of the poorest which would have otherwise had no access to crypto.

Byteball

Byteball (Bytes) is another DAG-based cryptocurrency developed by Anton Churyumov (graduate of Russian Research Nuclear University) that launched in December 25th 2016. Byteball focuses on conditional payments and human-readable contracts performing simple actions in an interactive way (i.e., human readable smart contracts and agreements via attestation chatbots and on-chain oracles).

Ethereum smart contracts in contrast are more complex and programmer-readable, aiming at strict business logic of the institutional kind while Byteball is intended for more immediate everyday use.

The data stored on Byteball's DAG allows users to secure each other's data by attaching it to previous data units created by other users and fees are proportional to the amount of resources consumed, in this case equal to the transaction size in bytes. This barrier to entry is Byteball's anti-spam mechanism and roughly reflects the utility of storage for the user and the cost of storage for the network.

The Byteball native currency is also bytes and part of the fee goes to the network overseers called witnesses. One pays a fixed amount of 1 byte of the currency for storing 1 byte of transaction data.

The witnesses are publicly identifiable individuals with real-world identity that stamp each transaction ensuring the integrity of the main chain. One can choose among them from the wallet interface the same way one selects his representatives in the Nano wallet. There are 12 witnesses involved in every transaction that occurs.

The wallet also allows for sending Bytes to e-mail accounts or via chat apps like WhatsApp or Telegram. BlackBytes is the complementary currency used for fully anonymous and untraceable p2p transactions.

IOTA

IOTA was originally born from a hardware initiative (JINN) aiming at producing a ternary-based microprocessor providing the hardware support for general distributed computing as the cornerstone foundation for connected IoT devices and eventually leveraging AI technologies.

JINN was first announced in September 23rd, 2014 on the NXT forum and subsequently evolved into IOTA leading to the founding of the IOTA Foundation as a Berlin-based non-profit in 2017.

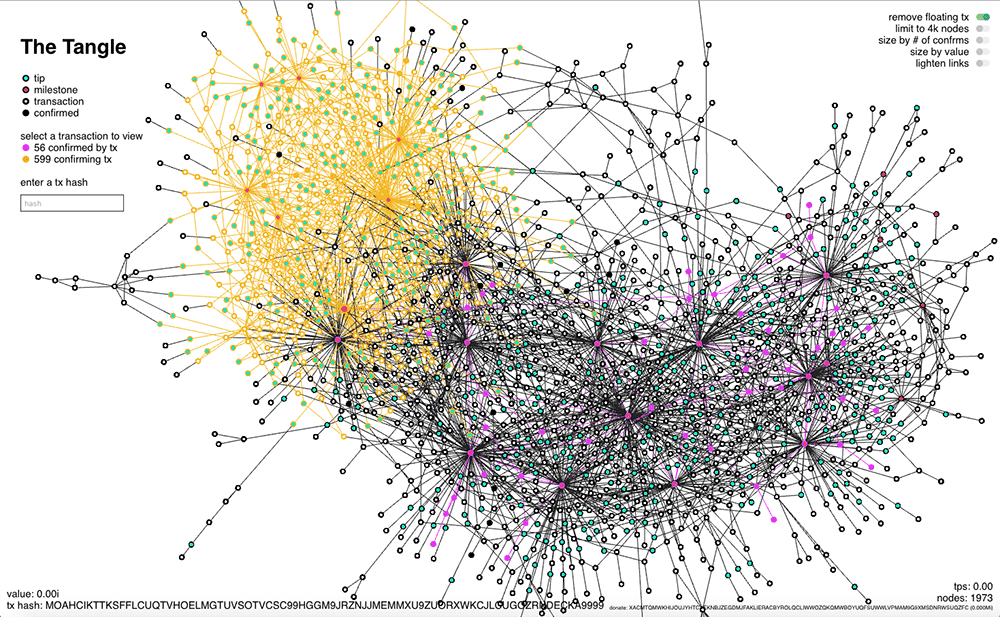

The foundation is dedicated to developing industrial standards and open protocols necessary for the IoT infrastructure as the backbone for a machine-to-machine economy. In that endeavor IOTA's DAG (called 'the tangle') is fundamentally different from other cryptocurrencies and cannot be conceived of in the same manner or measured by the same standards.

The necessarily unorthodox approach IOTA adopts is relevant precisely to the state of affairs in the IoT today and the problems faced with the massive proliferation of vulnerabilities among connected devices which have quadrupled in 2017 only.

IOTA’s tangle constitutes an abstract machinery of a rhizomatic kind, “ceaselessly establishing connections between semiotic chains”. Serguei Popov, a Moscow University Mathematics Ph.D. and one of the core founders of the IOTA project published a follow up paper on the 12th of May.

This analysed the game theoretical aspects of "selfish" players within the tangle, demonstrating in simulations the existence of an "almost symmetric" Nash equilibria in the dynamics of how the tangle is intended to function. In other words how "selfish" players will nevertheless cooperate with the network choosing attachment strategies close or similar to the "recommended" ones.

The IOTA Coordinator (Coo)

In terms of the protocol coordination between the Coo and the entering nodes is a two-way process. As the Coo issues the zero-valued milestone transactions in the same manner in which the nodes, in a sense, police the Coo in following the set rules of cooperation by synchronizing transactions with those milestones.

IOTA's DAG-valued tangle spawns a stochastic space of randomized "inconsistency" on the surface. It will then run probability distribution samplings in a training-based model for achieving network-wide consistency.

This is done in an ultimately self-sustainable mesh of opportunistic networks which connect and interact potentially in commonly shared mechanisms and protocol defined principles. This is actually by situational necessity or demand for particular resource as sub-tangles can dynamically detach and re-attach to the main tangle.

In the tangle's unique data structure there are no blocks building sequentially on previous blocks of transaction data, but instead every transaction must concatenate two other incoming ones to check and verify. This will couple functions of user and maintainer ("miner") rather than separating roles in heterogeneous contingents whose interests may not always coincide.

This also enables the simultaneous processing of transactions (rather than one-by-one) in a vortex of an ever expanding web of connections which exponentially decreases confirmation times. There are also no backlogs of unconfirmed transactions due to block size constraints.

In IOTA's underlying design rationale speed is favored over consistency while allowing for unlimited scalability (i.e., speed is a function of the size of the network) and consensus is directed as a forward inference of capture-pull-entangle (rather than verify-validate-hash-append) with periodic snapshotting clearing out recycled zero balances.

The tip selection (tip is an incoming transaction) mode for verifying the authenticity of incoming transactions runs them against the entire history of the tangle and as a transaction enters the tangle it does so in branch mode. This means that it will wait to be again selected by the same process as it accumulates trustworthiness and gets embedded deeper within the network.

Establishing Consensus

Using the coordinator, the present definition of consensus is simple: any transaction referenced by a milestone (a zero-valued transaction) is confirmed, and the others are not. This is critically important in the growth phase of the network’s infancy as otherwise an attacker may begin out-pacing the network. While attacking the network, they can start double-spending by referencing their own transactions, building up a parasite sub-tangle and infiltrating the network.

The unique IOTA token in this case acquires its true value only within its own organic circulatory system for which it has been specifically designed. This happens only when the tangle itself is mature enough and capable of organically defending itself from attacks. It will only happen after it accumulated enough critical mass of total weight of referenced transactions to ensure the Markov chain random walks will function as intended.

Markov Chain Monte Carlo methods as such are a category of algorithms used in Bayesian data analysis which compute models requiring integrations over thousands of unknown parameters with respect to complicated, high dimensional probability distributions.

Bayesian data analysis in general has long proven particularly useful in solving complex problems where there is large inherent uncertainty that needs to be quantified. It is potentially the most information efficient method to fit a statistical model, but also the most computationally intensive.

Also interesting to note is that in April 2017, Serguei Popov and Prof. Gideon Samid from Israel's Institute of Technology (and a recently joined IOTA Foundation member) recently published a paper on the subject.

In it, they proposed substituting the pseudo-random complexity of currently used cryptographic hash functions with a more computationally simple and truly random one. This, of course, would only make sense in light of the above mentioned microprocessor and a large enough network (tangle) in the future.

A quick overview of the IOTA protocol can also be found here.

Mineable DAG-Based Currencies

Even though both Nano and IOTA are pre-mined and fixed supply, there are options to mine Monero and get XRBs or iotas in browser (mostly for testing purposes in application development).

There are, however, DAG-based cryptocurrencies which allow for affordable and distributed mining. One such is Burstcoin, a project that began in 2014 deriving from NXT at the time (but also building on its own code base) that ensures cryptographic consistency of transactions via a Proof-of-Capacity consensus algorithm (using inexpensive, low-power hard drives).

Similar to lightning network, Burst constitutes a main blockchain for absolute storage/book-keeping and branching general purpose DAG structured transaction channels (a layer called Dymaxion) for propagation and verification which are then validated into the main chain.

Each channel can be opened with custom parameters and properties, such as defined duration, own meta-currency backed by Burst, degree of anonymity, network size, etc.

There is a public faucet provided and Burst is tradeable on Poloniex and Bittrex.

Dagger (XDAG) is another such recently launched project (mainnet was deployed on January 5, 2018) which allows for CPU/GPU mining and somewhat similar to other such architectures, couples blocks, transactions and addresses in a single unit.

Summary

Overall, all three DAG ecosystems are sufficiently different from each other in their goals, aims and utility despite some overlaps. However, they do share some common properties. One of the often raised criticisms is that DAGs are "centralized".

It is important to reflect a little and define the semantics of what words imply in different contexts (decentralized, distributed, etc.). We should then examine all the components of a network infrastructure and then consider which elements are centralized, decentralized, or what functions are delegated where, etc. and how all this ties together.

All three DAG projects can be said to be decentralizable, as that property depends on wider usage and adoption and thus individuals and parties willing to assume certain roles. Presently, Nano is organized around a handful of representative hubs, Byteball depends on a handful of witnesses to oversee the main chain and IOTA is run/trained from a Central Coordinator (the IOTA Foundation) which ensures activity flows according to the protocol.

The incentives with DAGs are not so much the immediate profit derived from PoW mining, but rather a substantial cost saving incentive which naturally attracts certain companies and businesses who have vested interest in the networks as they solve a business problem for them.

Disclaimer: These are the writer’s opinions and should not be considered investment advice. Readers should do their own research.